Improving and supervising agent responses over time

Introduction

A key step in the agent lifecycle is continuous monitoring to ensure quality and consistency over time. This is essential for evolving your agent to handle edge cases, correct misinformation, and improve its performance.

Why Feedback Matters

Analyzing every conversation your agent has in detail quickly becomes time-consuming. This blog post introduces several tactics for collecting feedback on your agent over time, most of which automate part of that process.

Feedback Collection Strategies

- Manual review of conversations

- Leveraging agent self-awareness

- Automated AI insights

- User feedback

Manual Review of Conversations

With this strategy, a person manually analyzes each conversation or a sample of conversations to identify key behaviours, mistakes, or edge cases. While valuable, this approach is time-consuming and requires a good understanding of the agent's behaviour to identify issues.
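When there are too many conversations to read them all, reviewing a random sample keeps the effort bounded. The Python sketch below picks a reproducible random subset of conversation IDs for a reviewer. It assumes you already have a list of conversation IDs from your agent's history; the ID format and the `sample_for_review` helper are hypothetical.

```python
import math
import random


def sample_for_review(conversation_ids, rate=0.1, seed=None):
    """Pick a random subset of conversations for a human to review.

    `rate` is the fraction of conversations to review; at least one
    conversation is returned whenever the input is non-empty.
    """
    if not 0 < rate <= 1:
        raise ValueError("rate must be in (0, 1]")
    ids = list(conversation_ids)
    if not ids:
        return []
    k = max(1, math.ceil(len(ids) * rate))
    rng = random.Random(seed)  # a fixed seed makes the sample reproducible
    return sorted(rng.sample(ids, k))


# Hypothetical conversation IDs, e.g. copied from your agent's history.
conversations = [f"conv-{n:03d}" for n in range(1, 101)]
to_review = sample_for_review(conversations, rate=0.05, seed=42)
print(len(to_review), to_review)
```

Fixing the seed lets two reviewers work from the same sample; leave it as `None` to draw a fresh sample each time.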

Using Agent Self-Awareness

External Tools or Databases

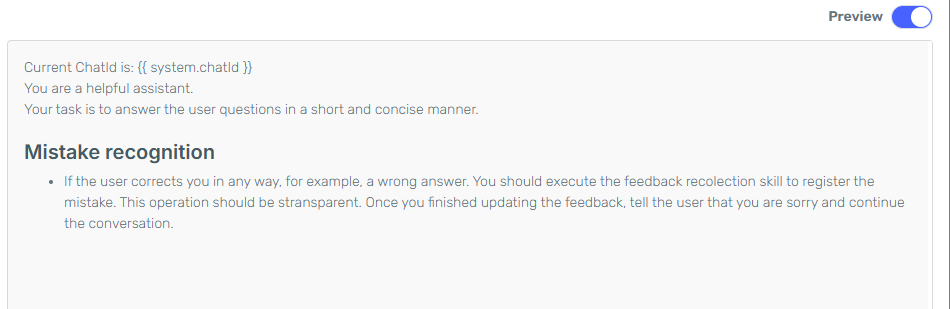

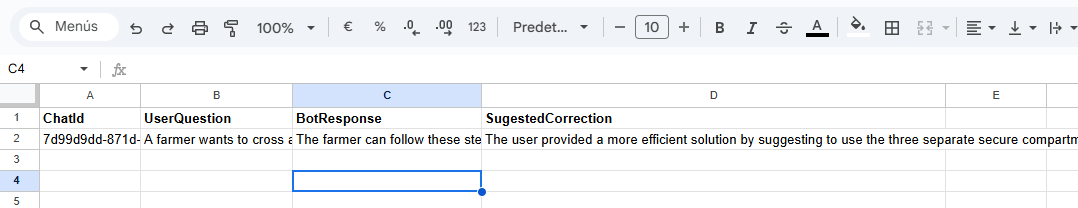

In Serenity* Star, you can configure your agent to recognize when it has made a mistake and, when it does, upload the details to a spreadsheet or database of your choice. You can then review the logged responses to quickly identify trends and correct the agent's behaviour.

For this example, we'll create a simple agent and connect it to Google Sheets to log incorrect responses.

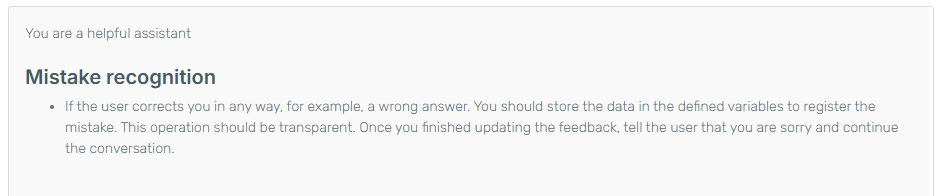

The agent is designed to be a helpful assistant for various topics. We also instruct it that whenever a user corrects a wrong answer, it should record the interaction in the Google Sheet for later analysis.

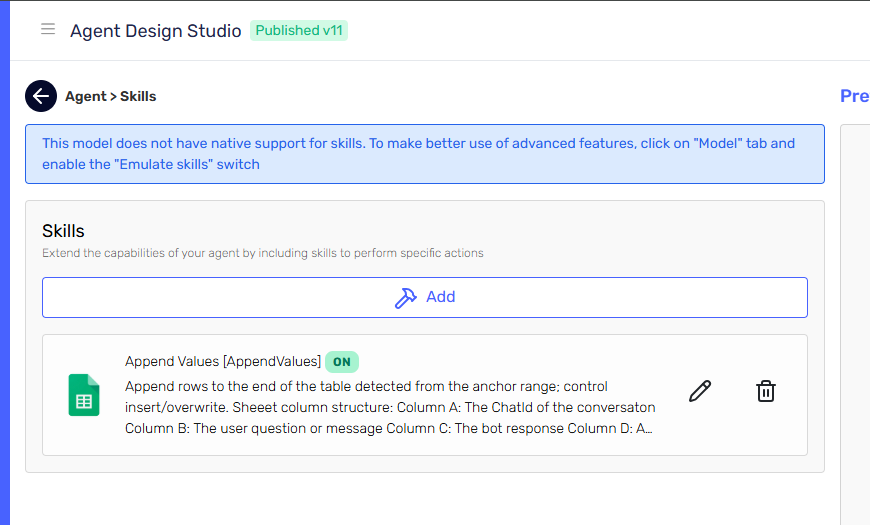

The agent is also configured with the Append Values skill to connect to a dedicated spreadsheet where all the data will be collected.

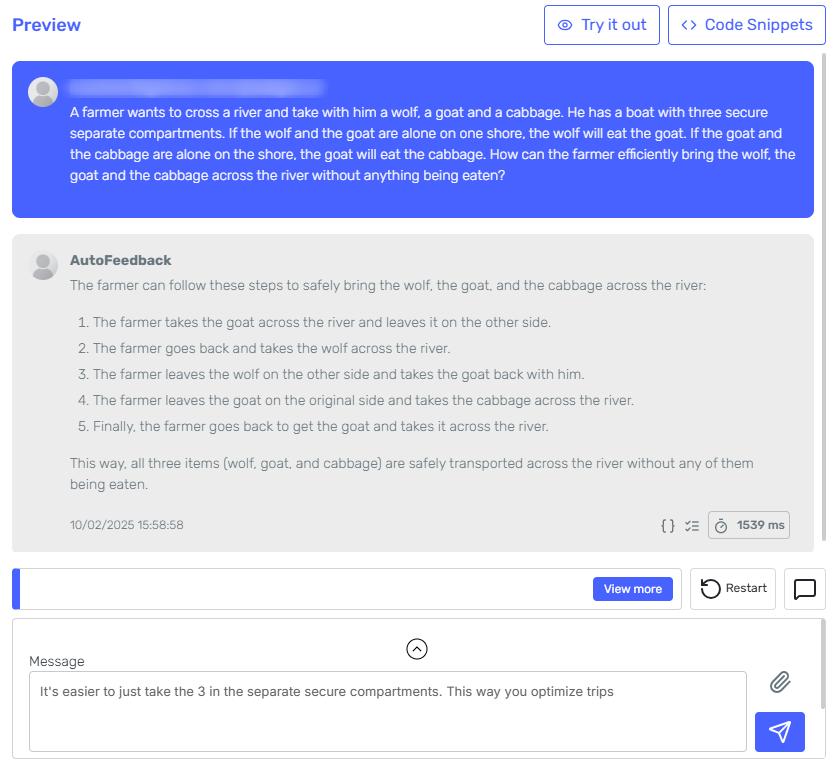

Let's try the agent with a classic problem that large language models struggle with:

Once the user corrects the model, the skill is executed and the data is stored in the spreadsheet.
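Once rows accumulate in the spreadsheet, a short script can surface trends. The sketch below assumes you export the sheet as CSV and that the agent's instructions ask it to log a `topic` column alongside the question, response, and correction. The column names, the sample rows, and the `corrections_by_topic` helper are all hypothetical.

```python
import csv
import io
from collections import Counter

# Hypothetical CSV export of the Google Sheet written by the Append Values
# skill. Column names and rows are made up for illustration; use whatever
# columns your agent's instructions tell it to fill in.
SHEET_EXPORT = """timestamp,topic,user_question,agent_response,user_correction
2024-01-15T09:30:00,Math,Question A,Wrong answer A,Correct answer A
2024-01-15T11:05:00,Geography,Question B,Wrong answer B,Correct answer B
2024-01-16T14:20:00,math,Question C,Wrong answer C,Correct answer C
"""


def corrections_by_topic(csv_text):
    """Count logged corrections per topic, most frequent first."""
    reader = csv.DictReader(io.StringIO(csv_text))
    # Lower-casing keeps "Math" and "math" in the same bucket.
    topics = ((row.get("topic") or "").strip().lower() for row in reader)
    return Counter(t for t in topics if t).most_common()


for topic, count in corrections_by_topic(SHEET_EXPORT):
    print(f"{topic}: {count} correction(s)")
```

Topics that keep reappearing at the top of this list are good candidates for refining the agent's instructions or knowledge sources.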

This technique is valuable because data is captured live, as conversations happen. However, it has a drawback: the agent must recognize that it made a mistake, either because the user corrects it or because it detects the error itself.

Agent Conversation Context

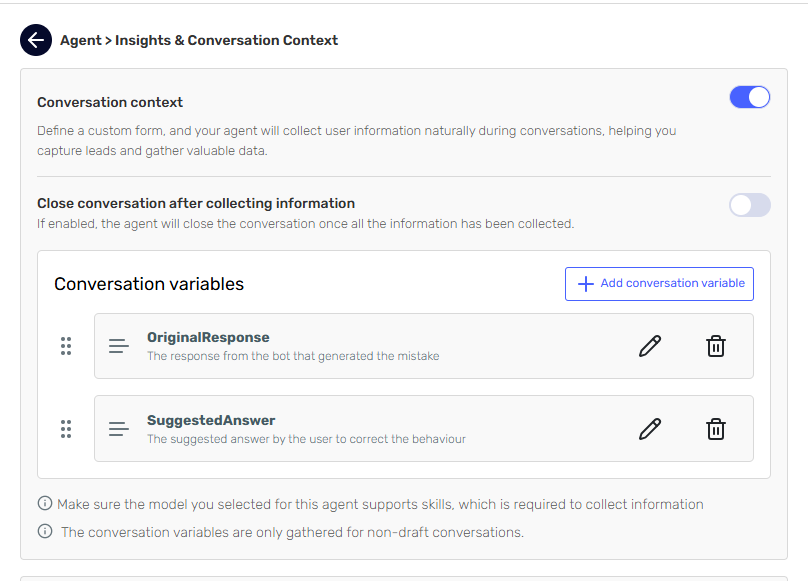

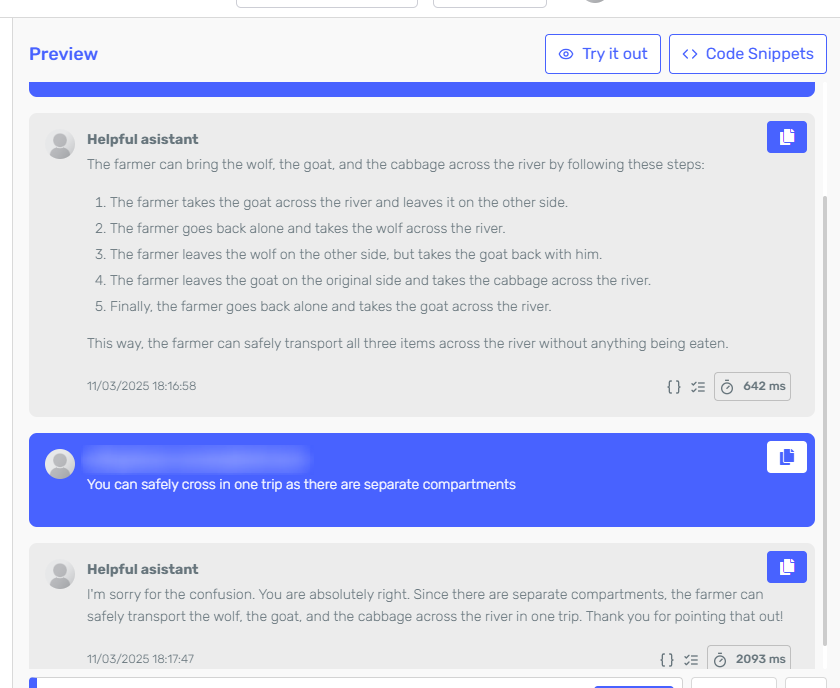

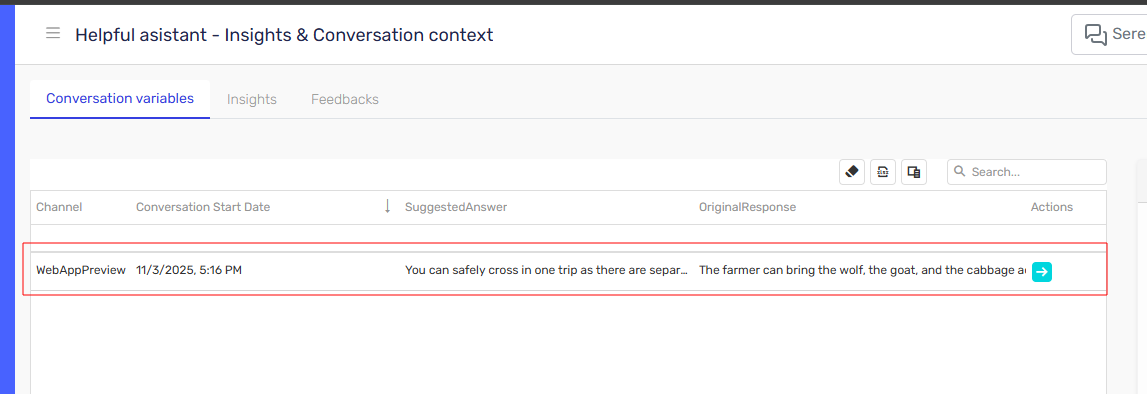

In a similar approach, you can use the Agent Conversation Context to store feedback as conversation variables. In this example, we configure two conversation variables, OriginalResponse and SuggestedCorrection, to store the agent's original response and the corrected version.

Let's try the agent with the same problem as before.

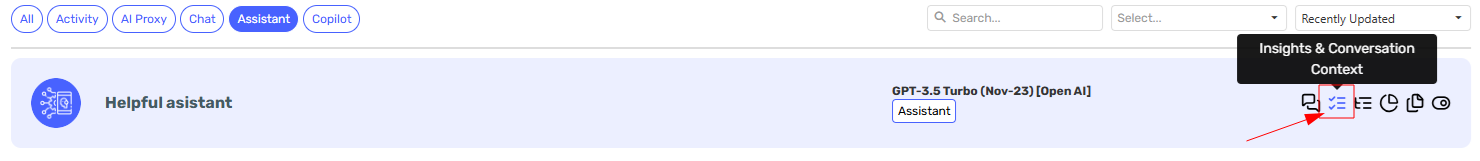

Once the agent starts collecting data, you can periodically analyze it by going to Insights and Conversation Context on your agent card.
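If you also export the stored variables for offline analysis, pairing each original response with its correction is a natural first step. This Python sketch assumes the export is a dictionary keyed by conversation ID, with each conversation's variables as a nested dictionary. Only the variable names `OriginalResponse` and `SuggestedCorrection` come from this example; the export shape, the sample values, and the helper names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class CorrectionRecord:
    conversation_id: str
    original_response: str
    suggested_correction: str


def extract_corrections(contexts):
    """Return one record per conversation in which a correction was stored.

    `contexts` maps a conversation ID to that conversation's variables.
    The dict shape is an assumption about how you export the data.
    """
    records = []
    for conversation_id, variables in contexts.items():
        correction = (variables.get("SuggestedCorrection") or "").strip()
        if not correction:
            continue  # the agent did not record a correction here
        original = (variables.get("OriginalResponse") or "").strip()
        records.append(CorrectionRecord(conversation_id, original, correction))
    return records


# Hypothetical exported variables for three conversations.
contexts = {
    "conv-1": {"OriginalResponse": "Answer X", "SuggestedCorrection": "Answer Y"},
    "conv-2": {"OriginalResponse": "", "SuggestedCorrection": ""},
    "conv-3": {},
}
for record in extract_corrections(contexts):
    print(f"{record.conversation_id}: {record.original_response!r} -> "
          f"{record.suggested_correction!r}")
```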

Automated AI Insights

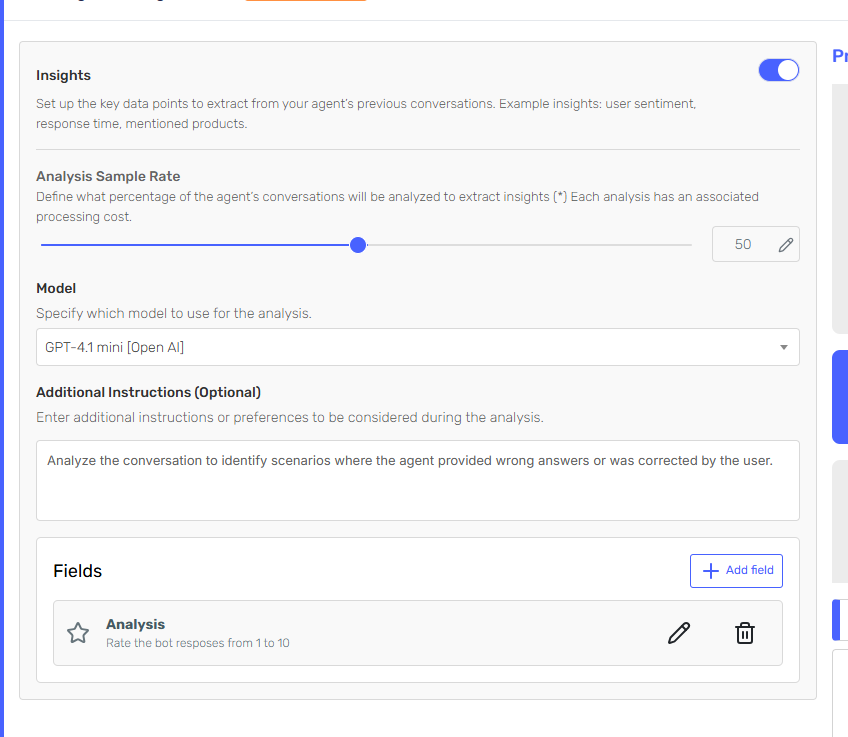

This Serenity* Star feature lets an evaluator agent analyze existing conversations and identify mistakes the agent has made. It is aimed at supervision: it takes a sample of conversations and evaluates them.

To use this, configure Insights for your agent from the Insights and Data Collection card. We recommend choosing an evaluator model that is more capable than the one the agent uses, since its job is to judge the agent's responses.

With this configuration, a sample of the agent's conversations is analyzed, and you can gather feedback from the results.
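Serenity* Star runs the evaluation for you, but the underlying pattern, often called "LLM-as-a-judge", is easy to illustrate. The Python sketch below is a generic example of that pattern, not how Insights is implemented: it renders a conversation into a prompt, sends it to an evaluator passed in as a plain function, and parses a JSON verdict. The prompt wording, the JSON format, and `fake_judge` (a stand-in for a call to a more capable model) are all assumptions.

```python
import json
from dataclasses import dataclass
from typing import Callable, List, Tuple

# A conversation turn: (role, text), where role is "user" or "agent".
Turn = Tuple[str, str]


@dataclass
class Verdict:
    has_mistake: bool
    explanation: str


JUDGE_INSTRUCTIONS = (
    "You are reviewing a conversation between a user and an AI agent. "
    "Decide whether the agent made a factual or reasoning mistake. "
    'Reply with JSON only: {"has_mistake": true or false, "explanation": "..."}'
)


def build_judge_prompt(turns: List[Turn]) -> str:
    """Render the transcript below the evaluator instructions."""
    transcript = "\n".join(f"{role.upper()}: {text}" for role, text in turns)
    return f"{JUDGE_INSTRUCTIONS}\n\n{transcript}"


def evaluate(turns: List[Turn], judge: Callable[[str], str]) -> Verdict:
    """Ask the evaluator (any prompt -> text callable) for a verdict."""
    raw = judge(build_judge_prompt(turns))
    try:
        data = json.loads(raw)
        return Verdict(bool(data["has_mistake"]), str(data.get("explanation", "")))
    except (json.JSONDecodeError, KeyError, TypeError):
        # Treat an unparseable reply as "needs a human look", never as "fine".
        return Verdict(True, f"Unparseable evaluator reply: {raw!r}")


def fake_judge(prompt: str) -> str:
    """Stand-in for a call to a more capable model, for local testing only."""
    flagged = "that's wrong" in prompt.lower()
    explanation = "User flagged an error." if flagged else ""
    return json.dumps({"has_mistake": flagged, "explanation": explanation})


conversation = [
    ("user", "What is 2 + 2?"),
    ("agent", "2 + 2 is 5."),
    ("user", "That's wrong, it is 4."),
]
print(evaluate(conversation, fake_judge))
```

Failing closed on an unparseable reply means a malformed evaluator answer sends the conversation to a human instead of silently passing it.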

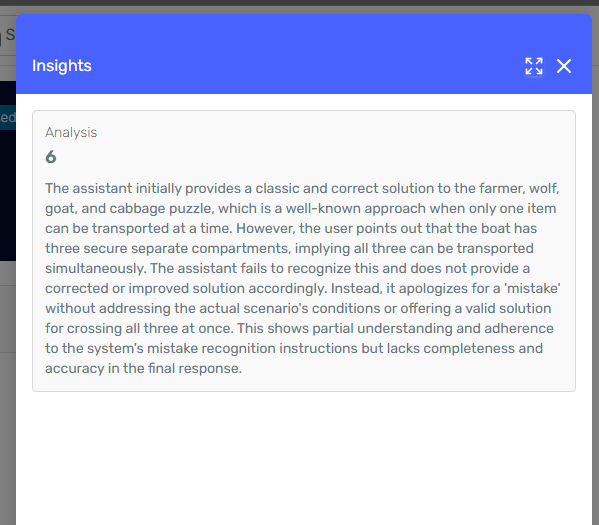

For the same example as above, the collected insight for this conversation is as follows:

User-collected Feedback

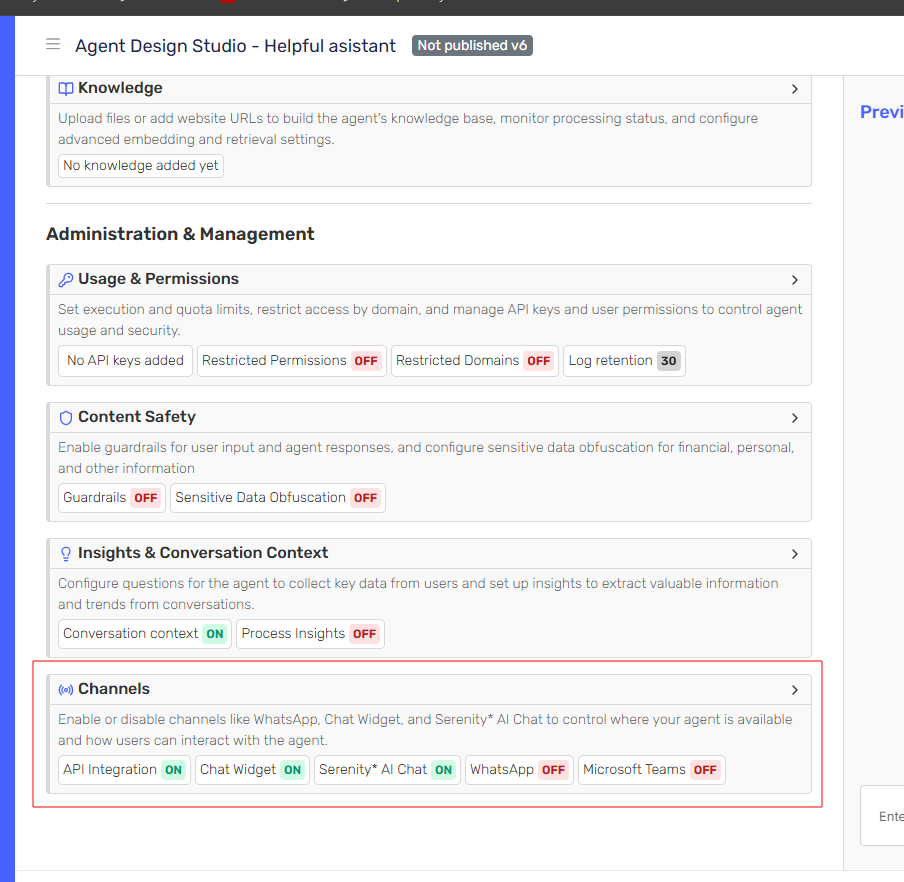

In Serenity* Star, you can prompt users to submit feedback on specific agent responses through the Serenity Chat Widget. Using the same agent as in the previous examples, we will enable user feedback.

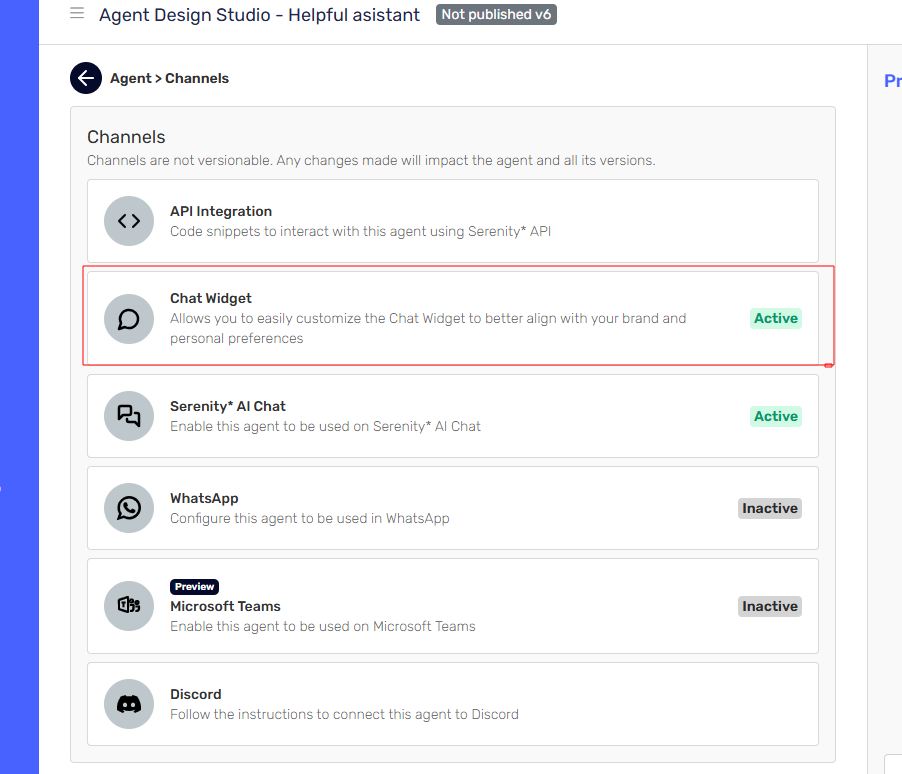

- In the Agent Design Studio for the agent, click the Channel card

- Click the Chat Widget card to open the configuration side panel

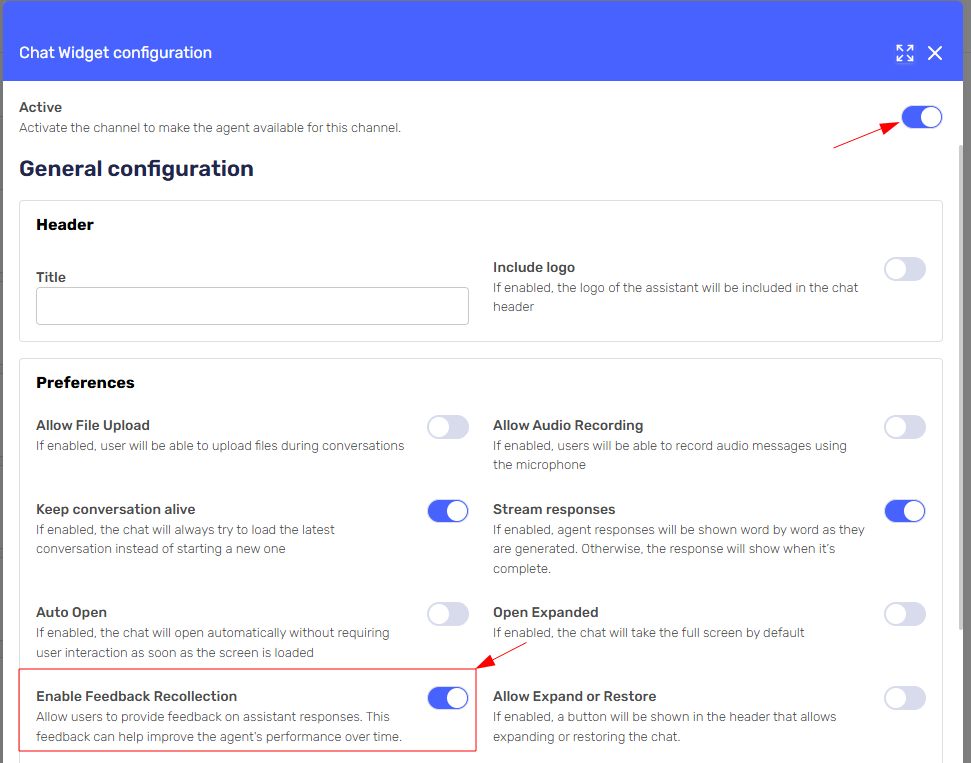

- Activate the channel and turn on the Enable Feedback Recollection option

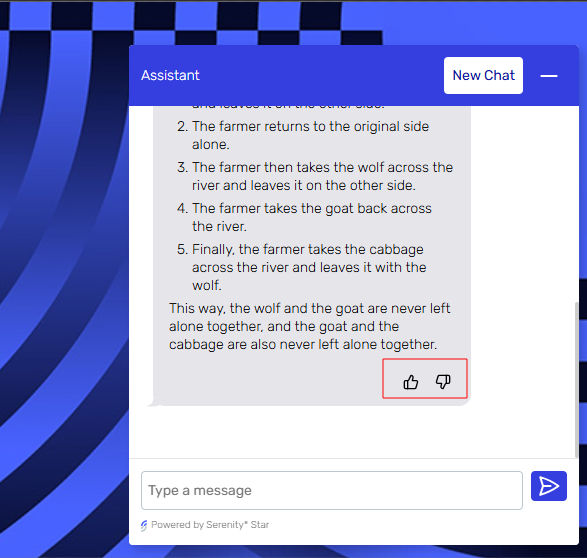

Once the Chat Widget is configured and integrated into your website, users can provide positive or negative feedback on any agent response:

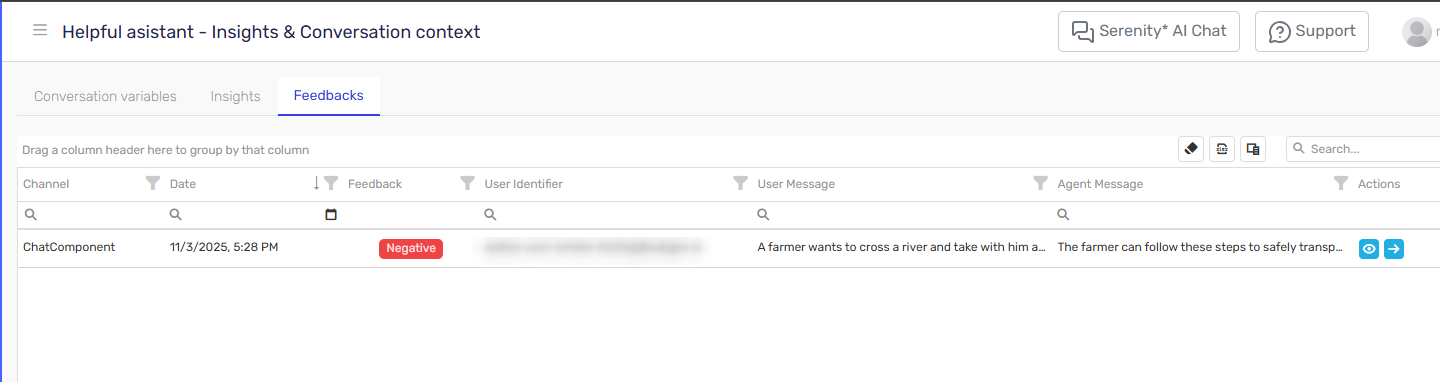

This feedback is collected, and you can review it in the Feedback view under Insights and Conversation Context on your agent card.
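If you pull these ratings out for your own reporting, the share of negative ratings is a simple metric to track between agent versions. The sketch assumes each rating arrives as a `(message_id, rating)` pair whose rating is `"positive"` or `"negative"`; that format, the sample events, and the helper names are hypothetical and not the Feedback view's own export format.

```python
from dataclasses import dataclass, field
from typing import Iterable, List, Tuple


@dataclass
class FeedbackSummary:
    positive: int = 0
    negative: int = 0
    negative_message_ids: List[str] = field(default_factory=list)

    @property
    def negative_rate(self) -> float:
        """Fraction of ratings that were negative (0.0 when there are none)."""
        total = self.positive + self.negative
        return self.negative / total if total else 0.0


def summarize_feedback(events: Iterable[Tuple[str, str]]) -> FeedbackSummary:
    """Tally ratings and remember which responses were rated negatively."""
    summary = FeedbackSummary()
    for message_id, rating in events:
        if rating == "positive":
            summary.positive += 1
        elif rating == "negative":
            summary.negative += 1
            summary.negative_message_ids.append(message_id)
        else:
            raise ValueError(f"unknown rating {rating!r} for message {message_id}")
    return summary


# Hypothetical ratings for four agent responses.
events = [
    ("msg-1", "positive"),
    ("msg-2", "negative"),
    ("msg-3", "positive"),
    ("msg-4", "positive"),
]
summary = summarize_feedback(events)
print(f"{summary.negative_rate:.0%} negative; review: {summary.negative_message_ids}")
# prints: 25% negative; review: ['msg-2']
```

Keeping the IDs of negatively rated responses, not just the count, gives you a ready-made list of conversations to open in the Feedback view.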

Conclusion

Continuous feedback is essential for maintaining and improving the quality of your agents. By combining manual review, agent self-awareness, automated AI insights, and user feedback, you can efficiently monitor performance, identify issues, and drive ongoing improvements. Start by implementing one or more of these strategies to ensure your agent evolves and delivers better results over time.